gRPC made easy - Project Crobat

In this post, I will be going over the implementation of the gRPC API used by Project Crobat. I figured this would make a good post as gRPC is, in my opinion, very cool and the use-case employed by Project Crobat is simple enough to provide a real-world example of its usage.

Why gRPC

So, you may be wondering why I'm even writing this post at all. What is gRPC? Why should I use it? Is it worth my time? These are all perfectly valid questions, and my answer to each of them would be simply "yes". However, as I am not a highly regarded source of truth, I'll take the time to justify my answer. So without further ado, what is gRPC and why should I use it?

gRPC is an RPC framework created by Google, designed to allow for the simple implementation of APIs defined in a protobuf (Protocol Buffers) specification (more on this later). The main benefits of gRPC are as follows:

- Supports simple implementations of data streaming over HTTP/2

- Uses a binary protocol to transfer data in a concise way, reducing message sizes and thus the time taken to transfer data between the server and clients

- Protobuf provides an API specification that can be used as a reference and allows for easy validation of messages

- The protobuf specification can be used to generate code for both a gRPC server and a client library (basically an SDK)

- From a single protobuf specification, client implementations can be automatically generated for the most popular languages

In comparison to a REST JSON API, gRPC can be between 5 and 20 times faster thanks to its more concise transfer format and the speed of parsing protobuf messages, although the actual gain depends heavily on the payloads and workload.
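To get a feel for where the smaller payloads come from, here is a minimal sketch using only the Python standard library. It hand-encodes a single string field the way protobuf does on the wire and compares the result with the equivalent JSON. It assumes a hypothetical Domain message with one field, `string domain = 1;`. The real crobat.proto may differ, and in practice the generated code does this encoding for you.

```python
import json


def encode_varint(value: int) -> bytes:
    """Encode a non-negative int as a protobuf base-128 varint."""
    out = bytearray()
    while True:
        byte = value & 0x7F  # take the low 7 bits
        value >>= 7
        if value:
            out.append(byte | 0x80)  # high bit set: more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def encode_string_field(field_number: int, text: str) -> bytes:
    """Encode one string field: tag, then length, then the UTF-8 bytes."""
    data = text.encode("utf-8")
    tag = (field_number << 3) | 2  # wire type 2 = length-delimited
    return encode_varint(tag) + encode_varint(len(data)) + data


# Hypothetical message: a Domain with a single field `string domain = 1;`
value = "www.example.com"
proto_bytes = encode_string_field(1, value)
json_bytes = json.dumps({"domain": value}).encode("utf-8")

print(len(proto_bytes), len(json_bytes))  # prints: 17 29
```

Protobuf sends a field number and a length instead of a field name, quotes and punctuation. For one tiny message the saving is only a handful of bytes, but it adds up over millions of streamed messages. Parsing cost matters as well, not just size.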

While gRPC is typically used for communication between microservices, it can also be used with web applications, although I have not tried this out yet.

Project Crobat Overview

In this post, we will be covering the implementation of a gRPC API for Project Crobat.

In short, Project Crobat is an API that indexes a massive dataset of DNS records provided by Rapid7's Project Sonar. The dataset contains approximately 1.9 billion rows of JSON totaling around 190GB of data. As a result, some queries, such as a reverse DNS lookup on a /8 network, can return exceptionally large JSON responses.
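Here is a rough back-of-the-envelope check on why these responses get so large. The figures above work out to about 100 bytes per row, and a /8 network contains 2^24 addresses. The sketch below assumes decimal gigabytes and evenly sized rows. It takes one matching record per address purely as an illustration, since real results can contain more or fewer.

```python
# Figures from the paragraph above; GB is taken as 10**9 bytes (an assumption).
rows = 1_900_000_000
total_bytes = 190 * 10**9

avg_row = total_bytes // rows  # average size of one JSON row in bytes
addresses_in_slash8 = 2 ** (32 - 8)  # a /8 leaves 24 host bits
# Illustration only: assume one matching record per address in the /8.
one_per_address = addresses_in_slash8 * avg_row

print(f"{avg_row} bytes per row, ~{one_per_address / 1e9:.2f} GB for one record per address")
# prints: 100 bytes per row, ~1.68 GB for one record per address
```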

For this reason, a gRPC API was implemented that makes use of streaming to increase result transfer speeds and to reduce the resources required to satisfy API requests on both the client and the server side.

Building an API

Okay, now that's out of the way, onto the fun stuff. What do we need for a gRPC API then?

- protobuf API definition

- RPC server implementation

- A client to relentlessly consume the data

Before we get started on the implementation details, note that the full source code of Project Crobat is available on GitHub in case you want to follow along.

A word on RPC design

If after reading this blog you decide to dive in and build something using gRPC but the paradigm shift from REST leaves you a little unsure how to structure your RPC API, Google has some great docs on gRPC API design.

Protowhat?

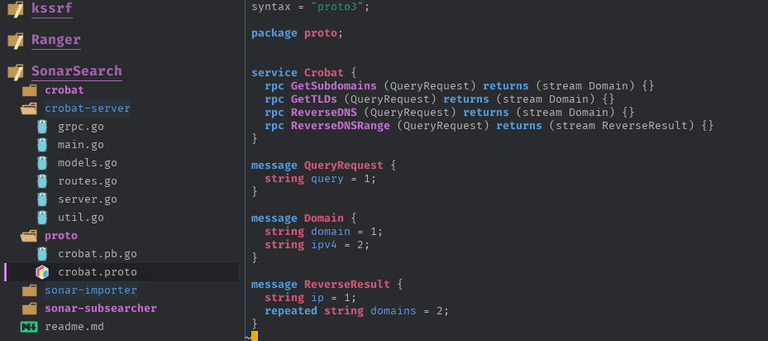

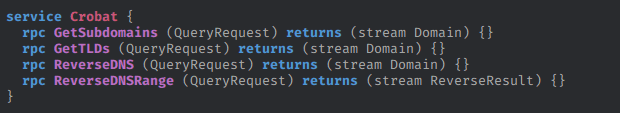

You've been going on about this protobuf thing for ages; just what is it exactly? Well, it's probably easier to show you than to explain it, so take a look at the screenshot containing the protobuf file for Project Crobat.

As can be seen above, the protobuf file starts with a syntax version and a package name before moving on to define a service.

This service is our API; the RPC endpoints within it are equivalent to the routes in a normal REST API.

Below the service definition, the message formats can be seen. These message formats define what the requests and responses used by the API will look like. While this may seem overly verbose to some of you, in languages such as Go where you'd be defining structs to deserialize JSON anyway, it really isn't too bad by comparison. Additionally, it's actually very helpful to have the format of your requests and responses all together in one file for easy viewing.

Now, back to the RPC definitions, which have the following structure:

```proto
rpc FunctionName (RequestMessage) returns (ReplyMessage)
```

The first message is the one passed into the RPC function when the client calls it, and the second is the message type returned by the endpoint. Within the message definitions, each field is assigned a number. These field numbers are necessary because they identify each field in the binary encoding; the field names are never sent. Field numbers must therefore be unique within a message and should not be changed once clients are using the API. In most cases they will just be sequential, so don't worry about them too much. Finally, the repeated keyword simply indicates that the field is a list of another type. That type can be a primitive such as int32 or string, or another user-defined message.

The hawk-eyed among you may have noticed the stream keyword in the ReplyMessage segment of the RPC definition. This simply indicates that the endpoint returns a stream of the message in question, as opposed to a single message. The same can be done with the RequestMessage segment to indicate that the client will be streaming results to the server.
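To make field numbers and the repeated keyword more concrete, here is a small Python decoder, using only the standard library, for messages made up of string fields. It is a simplified sketch, not a full protobuf parser: it only handles wire type 2 (length-delimited). The example bytes assume a hypothetical `repeated string subdomains = 1;` field.

```python
from __future__ import annotations


def decode_varint(buf: bytes, pos: int) -> tuple[int, int]:
    """Decode a base-128 varint starting at buf[pos]; return (value, next_pos)."""
    result = 0
    shift = 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift  # low 7 bits carry the payload
        if not byte & 0x80:  # high bit clear means this was the last byte
            return result, pos
        shift += 7


def decode_string_fields(buf: bytes) -> dict[int, list[str]]:
    """Group the string fields of an encoded message by field number."""
    fields: dict[int, list[str]] = {}
    pos = 0
    while pos < len(buf):
        tag, pos = decode_varint(buf, pos)
        field_number, wire_type = tag >> 3, tag & 0x7
        if wire_type != 2:
            raise ValueError(f"unsupported wire type {wire_type}")
        length, pos = decode_varint(buf, pos)
        fields.setdefault(field_number, []).append(buf[pos:pos + length].decode("utf-8"))
        pos += length
    return fields


# Hypothetical `repeated string subdomains = 1;` holding "www" and "mail":
# a repeated string field is simply the same tag (0x0A = field 1, wire type 2)
# appearing once per element.
encoded = b"\x0a\x03www" + b"\x0a\x04mail"
print(decode_string_fields(encoded))  # {1: ['www', 'mail']}
```

The decoder never sees the name subdomains, only the number 1. That is why field numbers must stay stable once an API is in use.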

Generating code for fun and profit

Great, so now we have a protobuf specification we can glance at to see what our requests and responses should look like. We're done here, right?

Not quite, now that we've written our protobuf definition we can actually do something really cool with it. We can generate client and server implementations for these RPC endpoints. For the server, these take the form of interfaces or classes which can be implemented to provide RPC endpoints. For the client, this process generates an importable library that contains functions that can be called to invoke the RPCs (essentially an autogenerated SDK).

What's more, these server and client libraries can be generated for basically all popular languages, meaning that gRPC is a great choice for microservices where different teams use different tech stacks.

To generate the Go code for the protobuf definition, the following command is run from the parent directory:

```shell
protoc -I proto proto/crobat.proto --go_out=plugins=grpc:proto
```

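In this command, -I proto sets the import path that protoc uses to locate the protobuf file and anything it imports, and proto/crobat.proto is the file to compile. The file has to sit inside one of the import paths, which is why the proto directory effectively appears twice. Next, --go_out=plugins=grpc:proto does three things: it tells protoc to generate Go code, enables the gRPC plugin so the service code is generated alongside the messages, and writes the output to the proto directory. Note that newer releases of protoc-gen-go no longer accept plugins=grpc. With those releases, the gRPC code is generated separately by protoc-gen-go-grpc using --go-grpc_out.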

The above command generates a crobat.pb.go file, which is then used to implement a server or construct a client.

Streaming endpoints

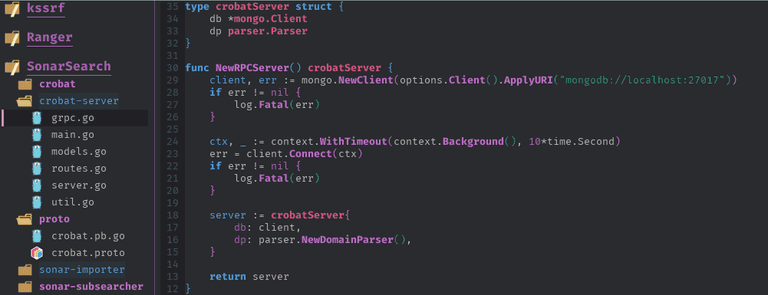

Cool, so now let's take a look at the implementation of a gRPC server with streaming endpoints. First, we need to create a gRPC server type. For Project Crobat, the server creation looks like this:

However, a simple implementation would need neither these struct fields nor a factory function.

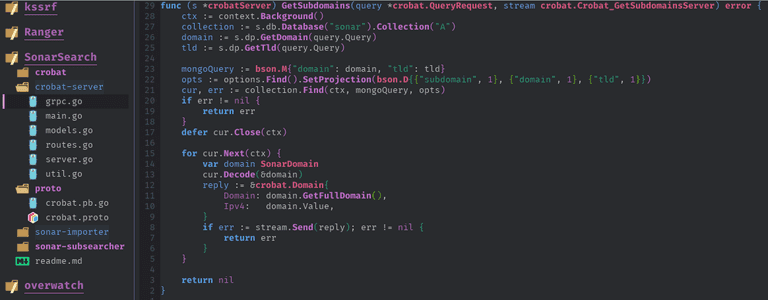

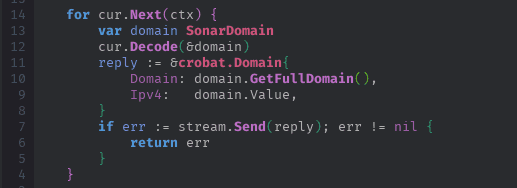

Now we can take a look at the implementation of one of these RPC calls which hangs off the Server type in the form of a method.

The most notable thing about the above screenshot is the method signature. First, we have the name GetSubdomains, which must match the one specified in the protobuf spec. Then, we take a parameter of type crobat.QueryRequest, which corresponds to the message we defined in our protobuf definition. Finally, we take a stream parameter, which is the stream we will send the results of the query over. The type of this stream parameter always follows the pattern ServiceName_RPCFunctionServer, so for this endpoint it is Crobat_GetSubdomainsServer.

If that went a bit over your head, I'd recommend checking out the gRPC docs for your programming language of choice.

The next handful of lines in the above example just handle the request. One thing to note, however, is the usage of query.Query. As we have defined the message format, the data given to our function has already been deserialized and can be accessed via dot notation, which is nice.

Things start to get interesting again once we hit the for loop, for this is where we start using the stream.

One amazing thing about streaming endpoints is that the cursor objects used to read database query results are typically also a kind of stream, in that you read rows out one by one. With non-streaming APIs, you would have to collect all the rows from the query, hold them in memory, serialize them, and send them off to the client. Depending on the result size, this can require a lot of RAM and may require pagination, which in itself can have performance overheads.

In this example, we are able to create a new Domain message (as defined in the protobuf spec), and send it off to the client through the stream for each row read from the database. Handing off data like this keeps the memory usage of the request pretty static regardless of the size of the result set, which is a lifesaver for something such as Project Crobat.

To send the newly created Domain message to the client, we simply call stream.Send(DomainMessage). Can't get much easier than that.
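The same ideas translate directly to Python. Below is a minimal sketch of a grpcio-style servicer; it is not Project Crobat's code. Its GetSubdomains method takes the request message and a context. Because it is a server-streaming RPC, it returns an iterator, and each yield plays the role of stream.Send. Rows are pulled from a database cursor one at a time rather than collected with fetchall(). To keep the example self-contained, it uses the built-in sqlite3 module with a hypothetical records table. It also substitutes stand-in dataclasses for the generated crobat_pb2 classes.

```python
from __future__ import annotations

import sqlite3
from dataclasses import dataclass
from typing import Iterator


# Hypothetical stand-ins for the generated crobat_pb2 message classes; the
# field names match those used by the Python client later in this post.
@dataclass
class QueryRequest:
    query: str


@dataclass
class Domain:
    domain: str


# In a real server this class would subclass crobat_pb2_grpc.CrobatServicer
# and be registered with crobat_pb2_grpc.add_CrobatServicer_to_server().
class SqliteCrobatServicer:
    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn

    def GetSubdomains(self, request: QueryRequest, context) -> Iterator[Domain]:
        # Server-streaming RPC: return an iterator; each yielded message is
        # handed to gRPC as it is produced, like stream.Send in Go.
        cursor = self.conn.execute(
            "SELECT name FROM records WHERE name LIKE ?",
            ("%." + request.query,),
        )
        for (name,) in cursor:  # rows come out of the cursor one at a time
            yield Domain(domain=name)  # no fetchall(), so results never pile up


# Hypothetical schema and data, kept in memory for the example.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE records (name TEXT)")
conn.executemany(
    "INSERT INTO records (name) VALUES (?)",
    [("www.example.com",), ("mail.example.com",), ("www.other.org",)],
)

servicer = SqliteCrobatServicer(conn)
for message in servicer.GetSubdomains(QueryRequest(query="example.com"), context=None):
    print(message.domain)
```

Servicer methods are ordinary methods, so they can be called directly like this in unit tests as long as the handler doesn't rely on the context object.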

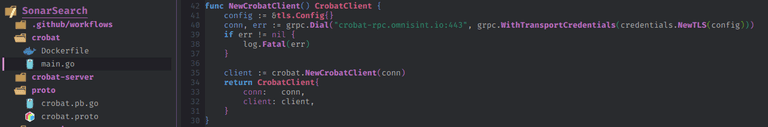

Building a client

Well, we've got a gRPC server now, which is great but what good is that without a client to consume it? Fortunately, building a client is incredibly simple thanks to the code generation we performed during previous steps. First things first, we're going to need to create a client as shown in the below code snippet.

Note that we are passing credentials built from an empty tls.Config{} as the transport credentials. An empty config uses Go's defaults: the connection is encrypted and the server's certificate is verified against the system's trusted roots. No client certificate is presented, because our API does not require authentication; this may be different depending on your implementation. The additional lines that return a CrobatClient simply return a struct so that we can define our own methods on it if needed.

Now that we have a client, we have to make calls to the API. This is done using the autogenerated functions which have the same name as the endpoints defined in your protobuf file. For Project Crobat, we have the following RPC endpoints defined:

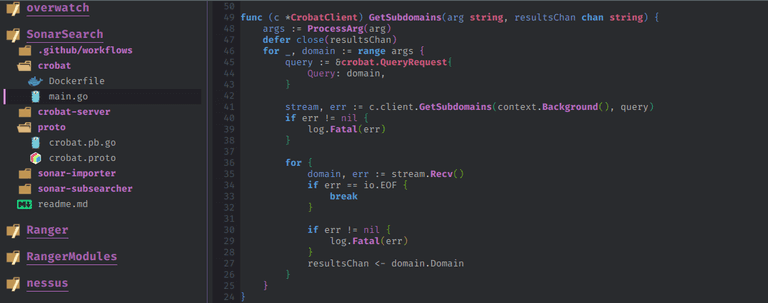

Thus, if we want to retrieve a list of subdomains, we will be calling the generated client method GetSubdomains.

Consuming streams

As the GetSubdomains endpoint is a streaming endpoint, we need to know how to consume the data sent over the stream. First, we create a stream instance by calling client.GetSubdomains, passing it an instance of QueryRequest, a message type defined in our protobuf file.

We then enter an infinite loop that reads from the stream. Error handling breaks out of the loop once the stream returns io.EOF, which signals that the server has finished sending results. In Project Crobat, the implementation of this endpoint is shown below. Note, however, that there is some additional stuff going on due to the way the stream is used and the general structure of the application:

As can be seen above, the code used to interact with the gRPC API is extremely minimal due to the code generation performed by protoc.
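For comparison, here is the same read-until-end loop sketched in Python. In grpcio, the object returned by a streaming call is an iterator. The end of the stream therefore shows up as StopIteration rather than io.EOF, and RPC failures are raised as grpc.RpcError. The fake_stream generator is a hypothetical stand-in for client.GetSubdomains(query), so the example runs without a server.

```python
from typing import Iterator, List


def fake_stream() -> Iterator[str]:
    # Hypothetical stand-in for the iterator returned by client.GetSubdomains(query).
    yield "www.example.com"
    yield "mail.example.com"


def consume(stream: Iterator[str]) -> List[str]:
    results = []
    while True:
        try:
            item = next(stream)  # roughly stream.Recv() in Go
        except StopIteration:  # end of stream, Python's counterpart to io.EOF
            break
        results.append(item)  # process each result as it arrives
    return results


print(consume(fake_stream()))  # ['www.example.com', 'mail.example.com']
```

In everyday Python you would just write a for loop over the stream, as in the Python client later in this post. A for loop does exactly this under the hood.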

Cross-language implementation

Ok, so now we have a functional gRPC server as well as a functional client that can retrieve subdomains from the API. One of the great things about gRPC is that you can employ code generation to generate code in many different languages which can be used to implement servers or clients for the defined gRPC API structure.

For example, let's say you're writing a Python script and you'd like to interact with the streaming endpoints provided by Project Crobat's API. First, we need to install the required dependencies:

```shell
pip install grpcio grpcio-tools
```

Then, we simply need to acquire a copy of the protobuf definition for the Crobat API, and then run the following command:

```shell
python -m grpc_tools.protoc -I=proto --python_out=. --grpc_python_out=. proto/crobat.proto
```

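The above command generates two Python modules that can be used to talk to the gRPC API. The first is crobat_pb2.py, which contains the message classes; the second is crobat_pb2_grpc.py, which contains the client stub and server base classes. Once that is complete, we can implement a simple client to retrieve subdomains from the Project Crobat API. The full code required to do this can be seen below: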

```python
import sys

import grpc

import crobat_pb2
import crobat_pb2_grpc

# Create a TLS channel using gRPC's default SSL credentials (no client certificate)
channel = grpc.secure_channel("crobat-rpc.omnisint.io:443", grpc.ssl_channel_credentials())

# Create a client, passing the channel
client = crobat_pb2_grpc.CrobatStub(channel)

# Create a query, setting the query as the first CLI argument
query = crobat_pb2.QueryRequest()
query.query = sys.argv[1]

# Iterate over the stream, printing each subdomain as it arrives
for subdomain in client.GetSubdomains(query):
    print(subdomain.domain)
```

Conclusion

This blog has covered the basics of creating a fast, multi-language API using gRPC. While this blog focussed primarily on streaming endpoints, gRPC also supports unary endpoints (standard, non-streaming requests), as well as client-side streaming, and bi-directional streaming endpoints. These are all pretty straightforward to implement, so I recommend checking out the gRPC Docs if you're interested.
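For reference, in Python's grpcio the four kinds of RPC differ in only two ways. A handler receives either a single request or an iterator of requests, and it returns either a single response or an iterator of responses. The handlers below are toy sketches that use plain strings instead of protobuf messages. Only the (request or request_iterator, context) shapes reflect the library's convention.

```python
from __future__ import annotations

from typing import Iterable, Iterator


def unary_unary(request: str, context) -> str:
    # Unary: one request in, one response out.
    return request.upper()


def unary_stream(request: str, context) -> Iterator[str]:
    # Server streaming: one request in, an iterator of responses out.
    for part in request.split("."):
        yield part


def stream_unary(request_iterator: Iterable[str], context) -> str:
    # Client streaming: consume an iterator of requests, reply once.
    return ".".join(request_iterator)


def stream_stream(request_iterator: Iterable[str], context) -> Iterator[str]:
    # Bi-directional streaming: respond to each request as it arrives.
    for request in request_iterator:
        yield request[::-1]


print(unary_unary("www", None))  # WWW
print(list(unary_stream("www.example.com", None)))  # ['www', 'example', 'com']
```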

Additionally, it is possible to build gRPC APIs that can be transcoded to JSON, allowing clients in languages that do not support gRPC to interact with them. This would typically be used within browser contexts. However, there is now pretty decent support for interacting with gRPC endpoints directly via code generation provided by gRPC-Web.

I have personally used gRPC-Web for a project and found it to work quite well, although you do lose some of the performance benefits provided by gRPC. This is because gRPC-Web doesn't fully support end-to-end streaming: a proxy handles the streaming and collates it into one big response, which is then sent to the browser.

If you've made it this far, thanks for reading, and I hope this has helped you gain an understanding of how to work with gRPC.